INTERVIEW: Polysemanticity w/ Dr. Darryl Wright

Darryl and I discuss his background, how he became interested in machine learning, and a project we are currently working on that investigates penalizing polysemanticity during neural network training.

Chapters

01:46 - Interview begins

02:14 - Supernovae classification

08:58 - Penalizing polysemanticity

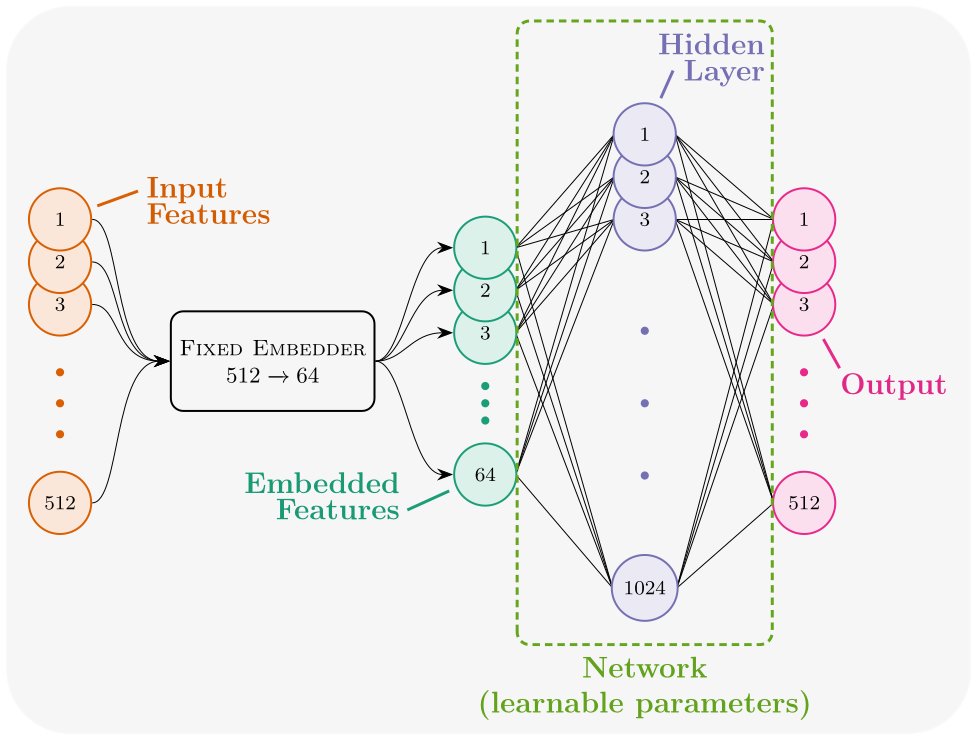

20:58 - Our “toy model”

30:06 - Task description

32:47 - Addressing hurdles

39:20 - Lessons learned

Links

Links to all articles and papers mentioned in the episode are listed below, in order of appearance.

- Zooniverse

- BlueDot Impact

- AI Safety Support

- Zoom In: An Introduction to Circuits

- MNIST dataset on PapersWithCode

- MNIST on Wikipedia

- Clusterability in Neural Networks

- CIFAR-10 dataset

- Effective Altruism Global

- CLIP Blog

- CLIP on GitHub

- Long Term Future Fund

- Engineering Monosemanticity in Toy Models
