MINISODE: “LLMs, A Survey”

Take a trip with me through the paper “Large Language Models, A Survey,” published on February 9, 2024. All figures and tables mentioned throughout the episode can be found on the Into AI Safety podcast website.

Chapters

00:36 - Intro and authors

01:50 - My takes and paper structure

04:40 - Getting to LLMs

07:27 - Defining LLMs & emergence

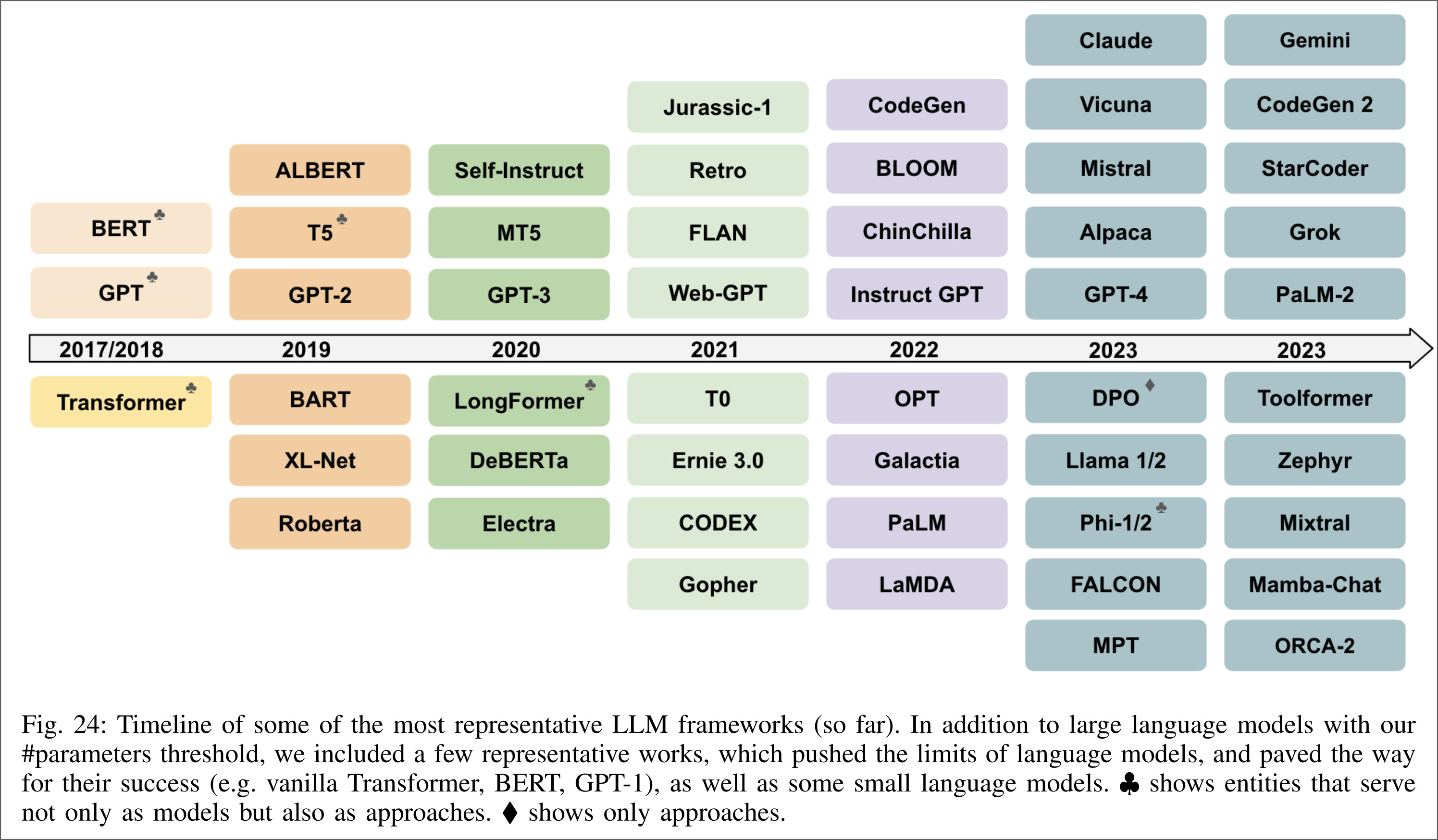

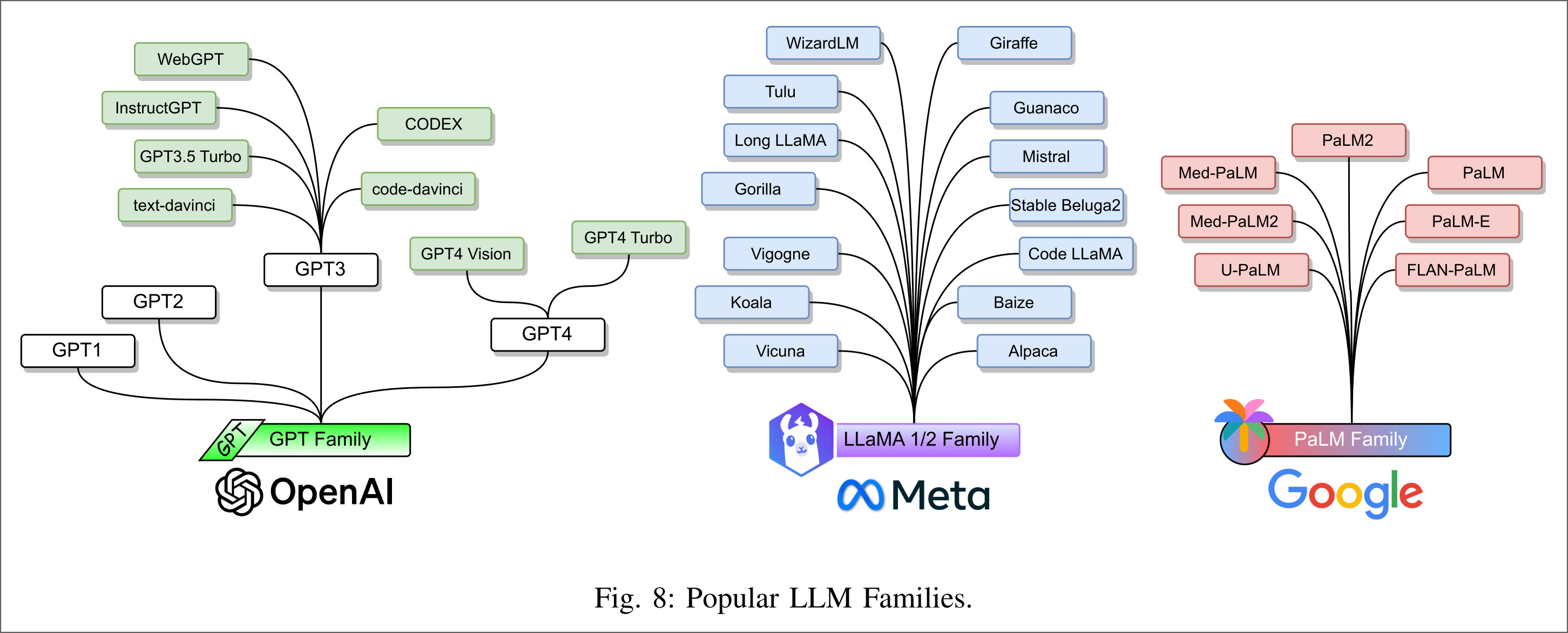

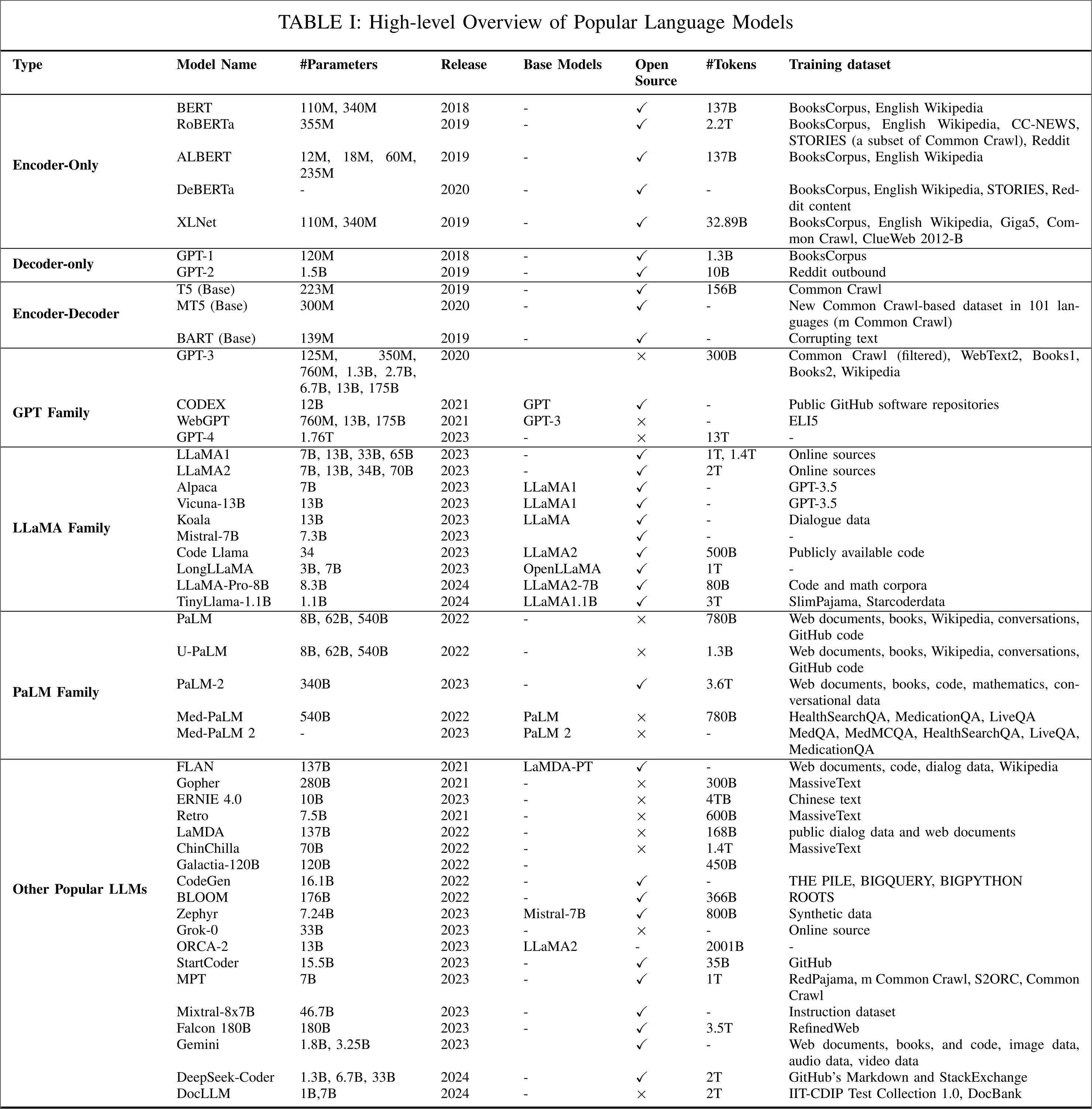

12:12 - Overview of PLMs

15:00 - How LLMs are built

18:52 - Limitations of LLMs

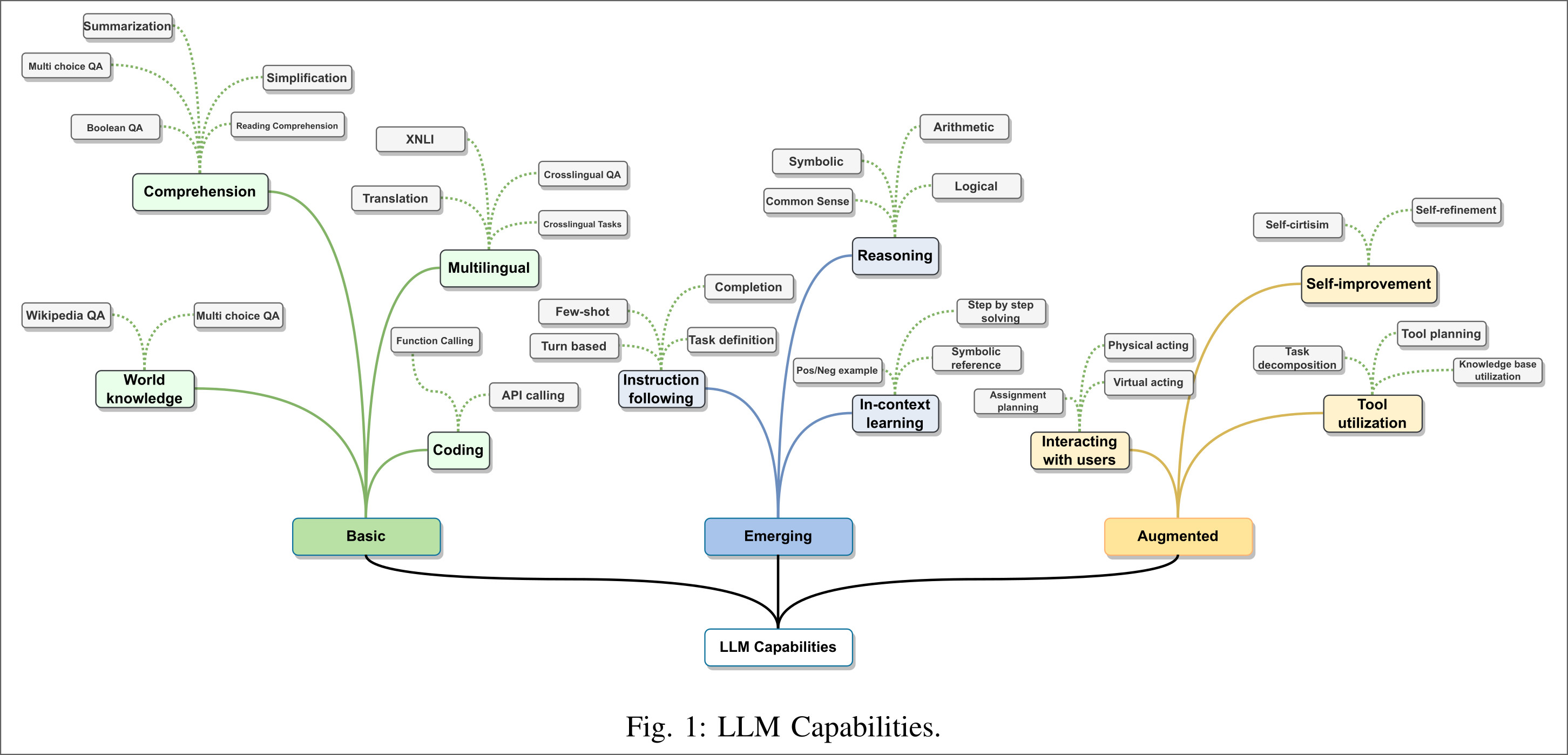

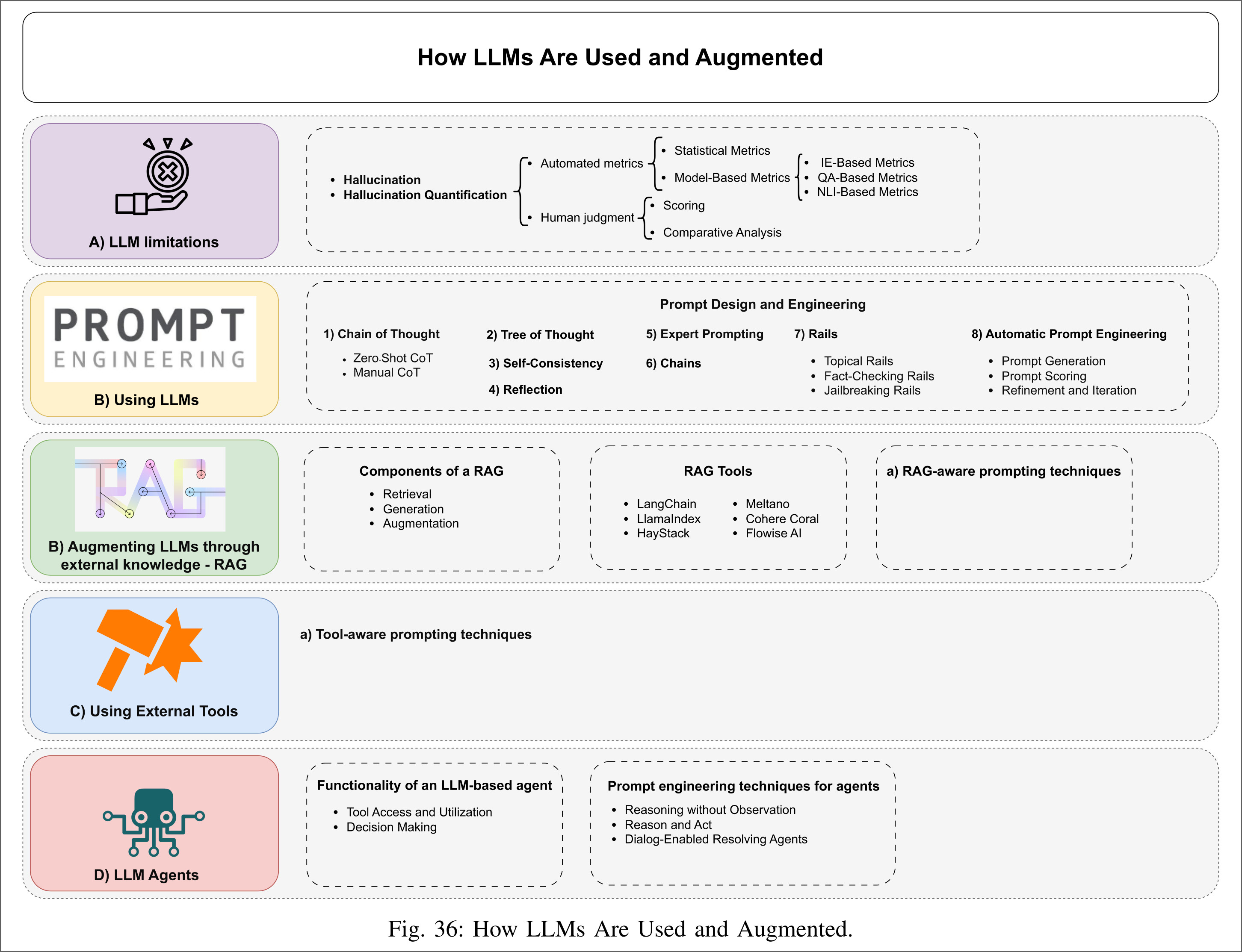

23:06 - Uses of LLMs

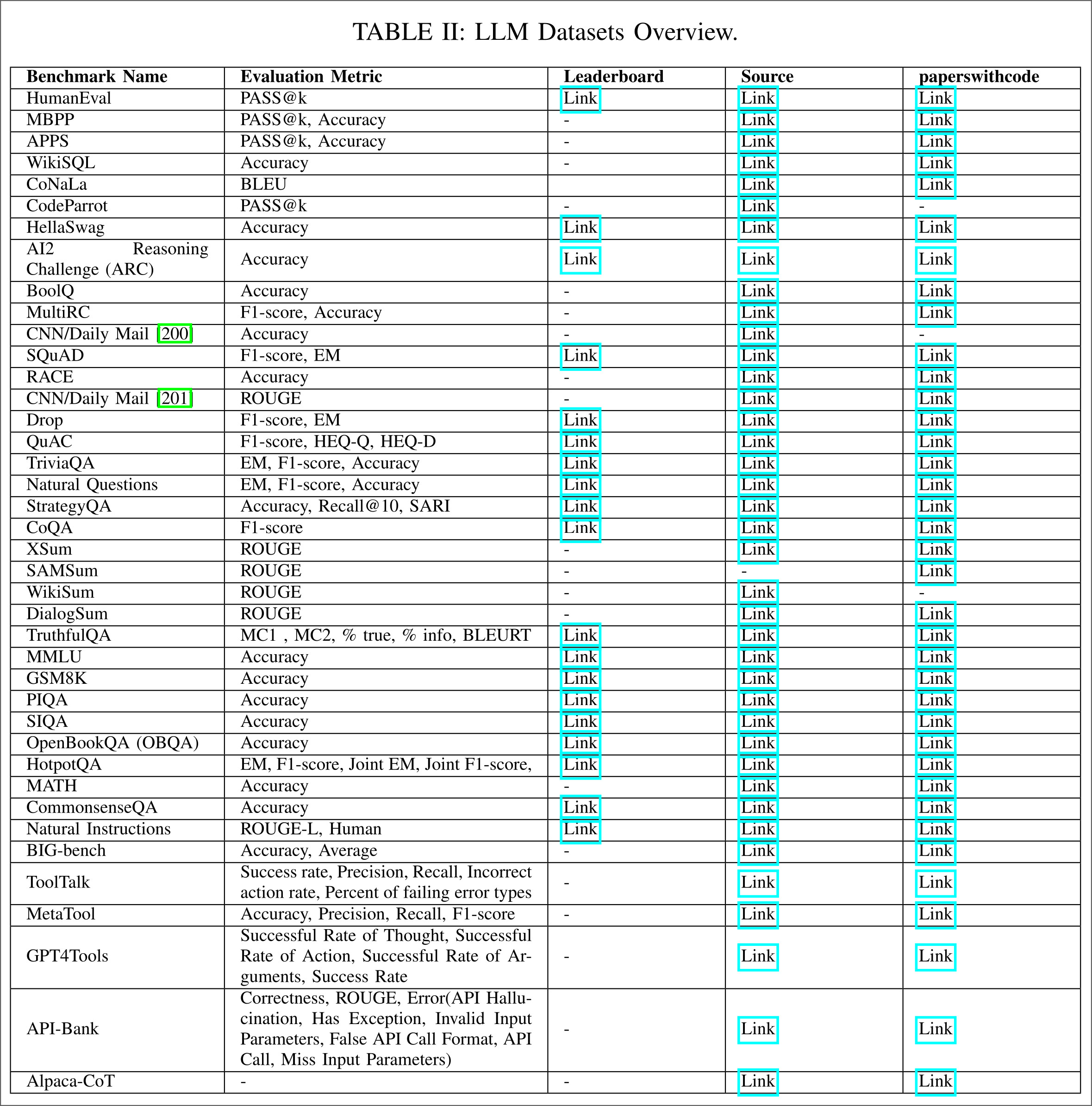

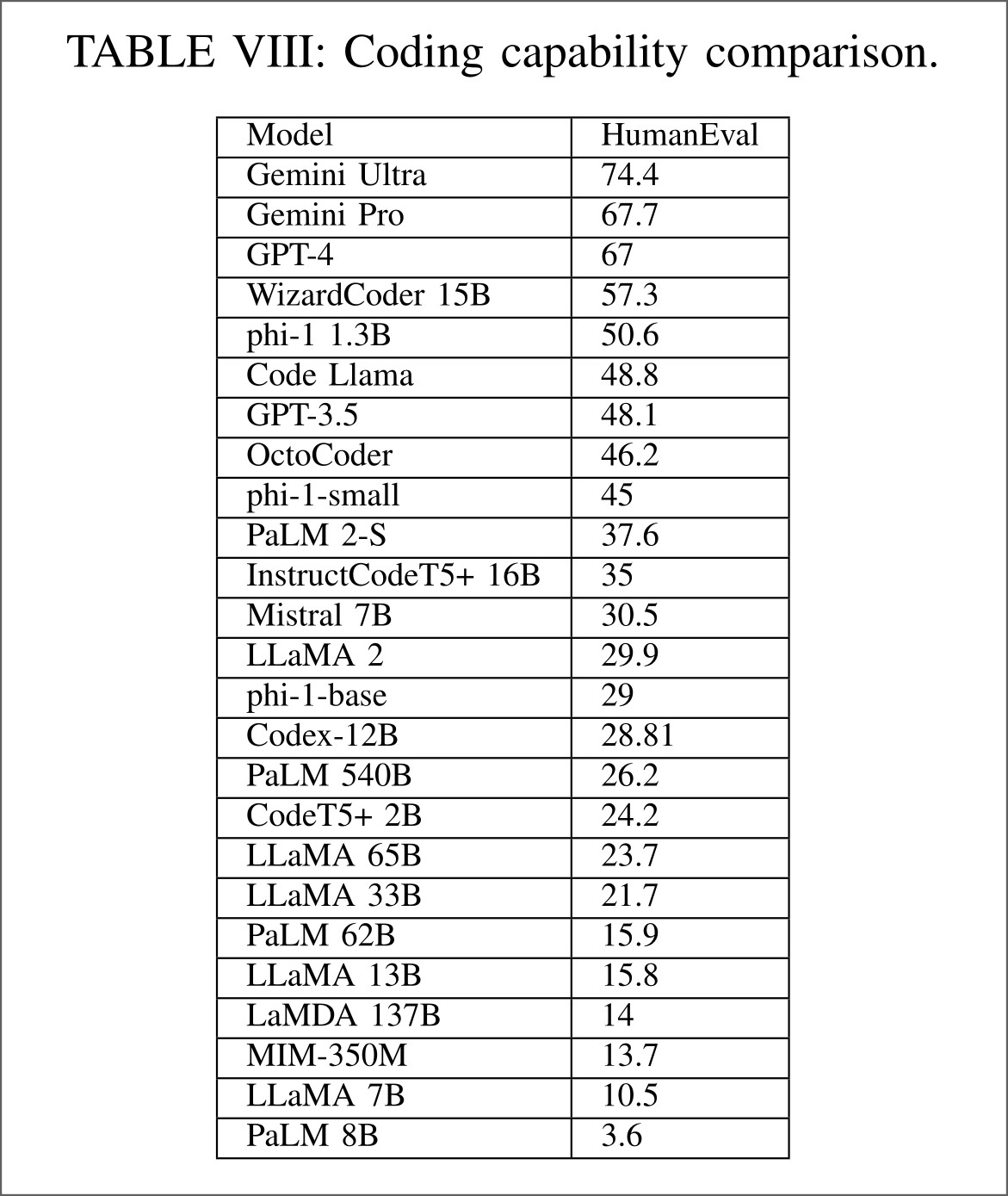

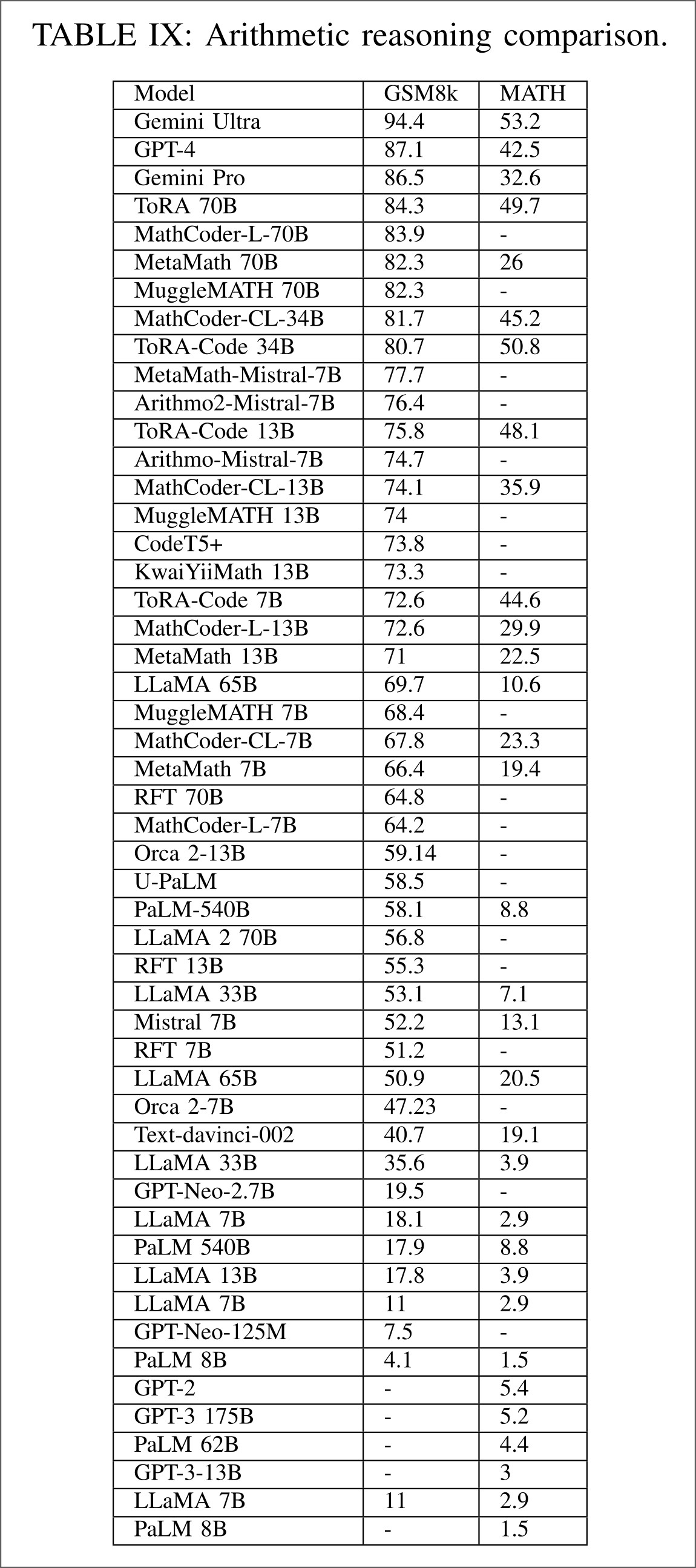

25:16 - Evaluations and Benchmarks

28:11 - Challenges and future directions

29:21 - Recap & outro

Figure and Table Gallery

Links

Links to all articles/papers which are mentioned throughout the episode can be found below, in order of their appearance.

- Large Language Models, A Survey

- Meysam’s LinkedIn Post

- Claude E. Shannon

- Future ML Systems Will Be Qualitatively Different

- More Is Different

- Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training

- Are Emergent Abilities of Large Language Models a Mirage?

- Are Emergent Abilities of Large Language Models just In-Context Learning?

- Attention Is All You Need

- Direct Preference Optimization: Your Language Model is Secretly a Reward Model

- KTO: Model Alignment as Prospect Theoretic Optimization

- Optimization by Simulated Annealing

- Memory and new controls for ChatGPT

- Hallucinations and related concepts—their conceptual background
